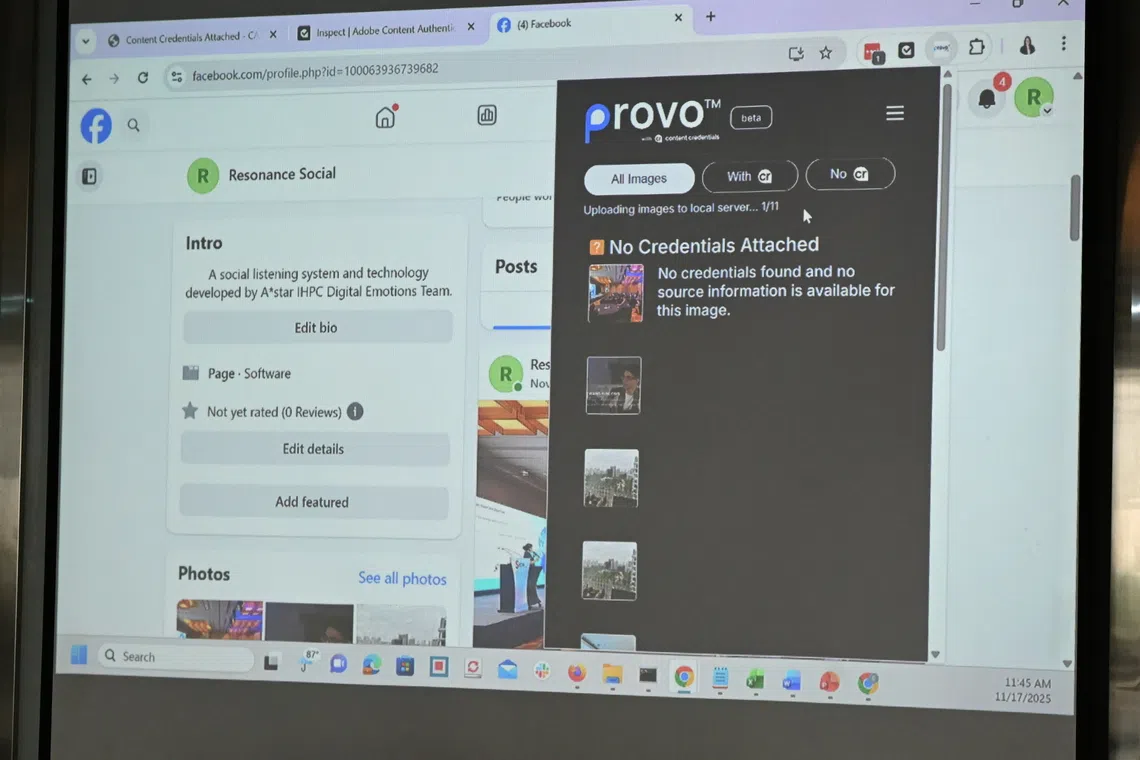

SINGAPORE – A free online tool will be launched in 2026 to help users distinguish real content from manipulated content by inspecting its metadata, which works like the nutrition label on a food product.

The tool, Provo, lets users such as news publishers and content creators embed labels in images and videos. Anyone can inspect the labels using the free Provo browser extension.

Provo is among the first tools unveiled by the Centre for Advanced Technologies in Online Safety (CATOS), set up in 2024 to hone Singapore’s expertise in detecting deepfakes and online misinformation.

Hosted by the Agency for Science, Technology and Research (A*STAR), the centre will receive $50 million in funding over five years under Singapore’s Research, Innovation and Enterprise 2025 Plan.

Speaking to The Straits Times, CATOS director Yang Yinping said that people should no longer rely on telltale signs they have been taught in the past to spot deepfakes.

“Unnatural blinking and blurring of the mouth – such tips might have worked in the past. But with the improvement of AI-generation techniques, even the world’s best tools cannot consistently detect these very realistic deepfakes,” said Dr Yang, who is also a senior principal scientist at A*STAR’s Institute of High Performance Computing (IHPC).

So verifying the source of content with tools such as Provo is a necessary complement to the continuous development of deepfake recognition technology, which she compared to a “never-ending cat-and-mouse game”.

The labels embedded in photos and videos online can be inspected by internet users regardless of where the content is reposted or shared.

The labels carry metadata such as the original publisher, the date the content was first posted, and any edits made before the content was posted.

If a photo or video has been manipulated by an unauthorised party, these labels that mark its authenticity will be automatically removed.
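The idea of a label that stops vouching for content once the content is altered can be illustrated with a minimal sketch. This is not Provo's actual implementation, which the article does not describe. It assumes the label stores a hash of the content plus a signature over the metadata, and it uses an HMAC with a shared demo key as a stand-in for the public-key signatures a real provenance system would likely use. The function names, field names and sample values are all hypothetical. The sketch also reports a failed check rather than removing the label.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real system would sign with a publisher's private key.
SIGNING_KEY = b"demo-publisher-key"


def make_label(content: bytes, publisher: str, first_posted: str, edits: list[str]) -> dict:
    """Build a label that binds the metadata to the exact bytes of the content."""
    metadata = {
        "publisher": publisher,
        "first_posted": first_posted,
        "edits": edits,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialise with sorted keys so the same metadata always yields the same bytes.
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"metadata": metadata, "signature": signature}


def label_is_valid(content: bytes, label: dict) -> bool:
    """Return True only if neither the metadata nor the content has changed."""
    metadata = label["metadata"]
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, label["signature"]):
        return False  # the metadata was altered after signing
    return metadata["content_sha256"] == hashlib.sha256(content).hexdigest()


original = b"original image bytes"
label = make_label(original, "Example News", "2026-01-15", ["cropped"])

print(label_is_valid(original, label))                 # True
print(label_is_valid(b"tampered image bytes", label))  # False
```

In this sketch, changing even one byte of the content changes its hash, so the label no longer matches the content it travels with.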

Provo will show users the metadata of content found online, such as the original publisher and the date it was first posted.

PHOTO: COURTESY OF CENTRE FOR ADVANCED TECHNOLOGIES IN ONLINE SAFETY

Development of Provo began after CATOS and American software giant Adobe signed an agreement in 2024 to implement content provenance technologies here.

More details of its launch are expected in May 2026, said Ms Therese Quieta, head of systems engineering at CATOS.

Dr Yang said: “We see (Provo) as a hope that together with deepfake detection technology, these tools can contribute to the scene of maintaining information integrity on the internet.”

She added that although content provenance technology is still in its early days in the region, it could play a key role in how digital information is displayed in the future.

As trust is at the core of Provo’s workings, the team is working out the identity verification process so that it is impossible for someone to impersonate an organisation or another individual when using the platform, said Ms Quieta. She added that the team might consider integrating Singpass verification, which would allow the platform to authenticate users based on government records.

To create an essential trust ecosystem, the team at CATOS is also in talks with content creators and leading publishers to use Provo.

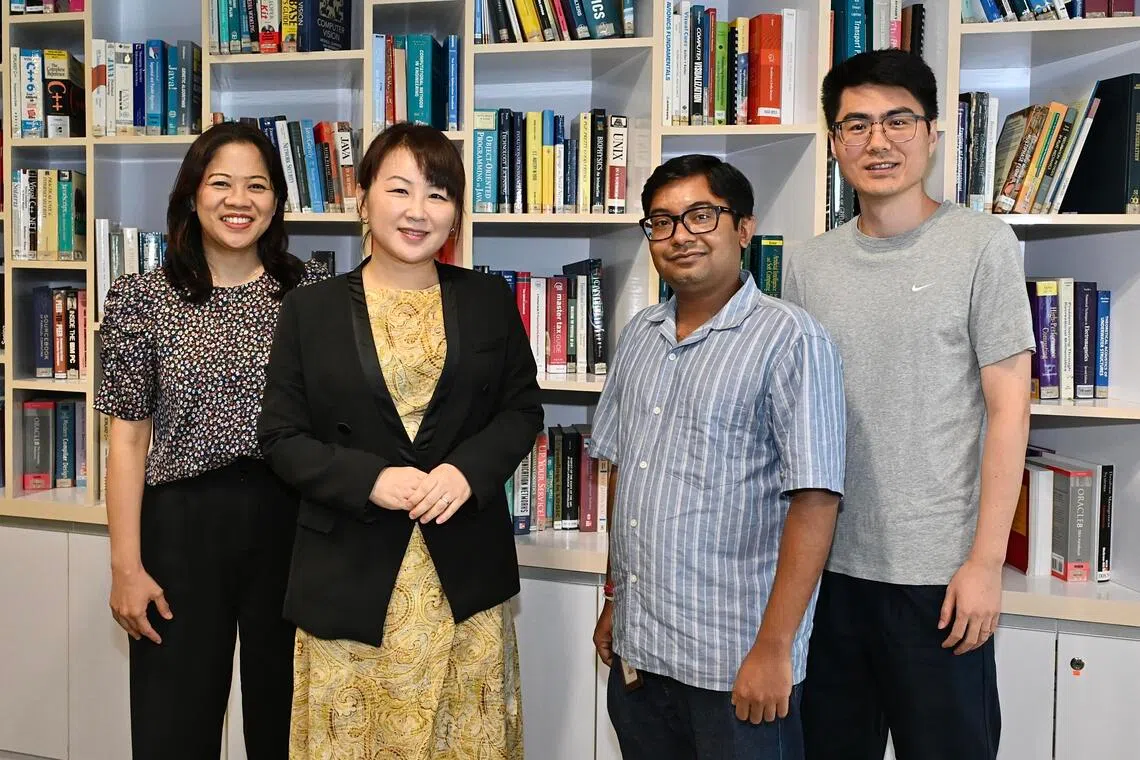

CATOS’ team includes (from left) head of systems engineering Therese Quieta, director Yang Yinping, principal scientist Soumik Mondal, and senior scientist Wang Yunlong.

ST PHOTO: DESMOND WEE

CATOS has also rolled out a deepfake recognition platform, called Sleuth, which employees of some public agencies and media companies have begun using to spot manipulated content.

Sleuth works by analysing pixel-level inconsistencies introduced during the manipulation process which the human eye cannot detect, said CATOS principal scientist Soumik Mondal.

Using these anomalies, the software can determine whether faces have been swopped or manipulated, or whether the audio and environment have been AI-generated. A frame-by-frame analysis pinpoints the specific parts of a video that have been manipulated.

The 50-strong team of scientists and engineers at CATOS is working towards potentially releasing Sleuth for public use.

“We need to have the infrastructure to handle the volume of content, as we are talking about a population of around five million people,” said Dr Mondal.

For such a tool to be made available to the masses, it is also a question of whether the public will be able to accept possible judgment errors.

“Even if it is at 99 per cent accuracy, that 1 per cent that Sleuth is wrong can be catastrophic if we roll it out to everyone in Singapore,” said Dr Mondal.
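The scale behind this concern can be worked through with simple arithmetic. The sketch below assumes, purely for illustration, that each of the roughly five million people mentioned checks one piece of content and that the tool is right 99 per cent of the time. The function name and the one-check-per-person assumption are hypothetical and do not come from CATOS.

```python
def expected_wrong_verdicts(checks: int, accuracy: float) -> int:
    """Expected number of wrong verdicts for a given number of checks."""
    return round(checks * (1 - accuracy))


# One check per person across a population of about five million.
print(expected_wrong_verdicts(5_000_000, 0.99))  # 50000
```

Under these assumptions, a 1 per cent error rate would still produce about 50,000 wrong verdicts.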

The CATOS team will also expand its efforts to curate a series of public education materials that teach AI literacy, said Dr Yang.

Checking sources and having a trusted adult to speak to are key when one encounters suspicious content, said CATOS director Yang Yinping.

ST PHOTO: DESMOND WEE

Given the modern tools available to generate realistic deepfakes, her advice for the public is to build a habit of checking the credibility of the source, and not to judge based on the content itself.

“To me, the missing link in the real world is also whether we have a trusted adult we can talk to,” said Dr Yang.

“Individually, we cannot make 100 per cent accurate judgments, especially if content is very interesting and engaging. But with good relationships, we can at least communicate with someone we trust.”